If you are considering containers for running an application, there is an enormous range of technologies to choose from, and picking the right one can be challenging. One frequent question in cloud computing is whether to use Docker or Kubernetes to manage application containers. In reality, Docker and Kubernetes are not direct rivals competing for popularity or adoption. They are different technologies that can work together to run an application more smoothly.

What is Kubernetes?

Kubernetes is an open-source container management tool that originated at Google. It helps you run containerized applications across physical, virtual, and cloud environments.

It is a highly flexible container tool, capable of delivering even complex applications. Even when applications run on clusters of thousands of individual servers, Kubernetes lets you manage the containerized application more efficiently.

What is Docker?

Docker is an open-source, lightweight containerization technology from Docker Inc. It has gained wide popularity in the cloud computing and application container world. It lets you automate the deployment of applications in lightweight, portable containers.

It is a software tool used for virtualization. It lets you run multiple isolated environments, such as different Linux distributions, on the same host. Virtualization in Docker happens at the operating-system level, and the isolated units it creates are called Docker containers.

Difference between Kubernetes and Docker Swarm

| Factor | Kubernetes | Docker Swarm |
| --- | --- | --- |
| Developed by | Google | Docker Inc |
| Launch year | 2014 | 2013 |
| Installation | Complicated and time-consuming | Simple and fast |
| Cluster configuration | Cluster is very strong | Cluster is not very strong |
| Container limit | Limited to 300,000 containers | Limited to 95,000 containers |
| Compatibility | More comprehensive and highly customizable | Less extensive and customizable |
| GUI | Kubernetes Dashboard | No GUI |
| Public cloud service providers | Google, Azure, and AWS | Only Azure |
| Scaling | Auto-scaling | No auto-scaling |
| Load balancing | Load balancing is configured manually | Automatic load balancing |
| Fault tolerance | Low fault tolerance | High fault tolerance |
| Term for worker machines | Nodes | Workers |
| Logging and monitoring tool support | Provides built-in tools for logging and monitoring | Lets you use third-party tools such as ELK |
| GitHub stars | 54.1k | 53.8k |
| GitHub forks | 18.7k | 15.5k |
| Organizations using it | 9GAG, Intuit, Buffer, Evernote, etc. | Spotify, Pinterest, eBay, Twitter, etc. |

Characteristics of Kubernetes

- Offers automated scheduling

- Has self-healing capabilities

- Supports automated rollouts and rollbacks

- Includes horizontal scaling and load balancing

- Offers a higher density of resource utilization

- Offers enterprise-ready features

- Provides application-centric management

- Supports auto-scalable infrastructure

- Lets you create predictable infrastructure

- Provides declarative configuration

- Deploys and updates software at scale

- Offers environment consistency for development, testing, and production

Characteristics of Docker

- Isolated environments for managing your applications

- Easy modeling

- Version control

- Placement/affinity

- Application agility

- Developer productivity

- Operational efficiency

Benefits of using Kubernetes

- It is developed by Google, which brings years of valuable industry experience to the table

- Easy organization of services with pods

- Largest community among container orchestration tools

- It offers a variety of storage options, including on-premises storage area networks (SANs) and public clouds

- It adheres to the principles of immutable infrastructure.

Benefits of Docker

- Offers an efficient and easier initial setup

- Integrates and works with existing Docker tools

- Lets you describe your application lifecycle in detail

- Docker lets users track their container versions easily and examine discrepancies between earlier versions.

- Simple configuration, and it works smoothly with Docker Compose.

- Docker offers a fast-paced environment that boots up a virtual machine and gets an app running in a virtual environment quickly.

- The documentation covers every piece of information.

- Provides fast and simple configuration to boost productivity

- Ensures that the application is isolated

Drawbacks of using Kubernetes:

- Migrating to stateless requires a lot of effort

- Limited functionality, depending on what is available in the Docker Application Programming Interface (API).

- Highly complex installation and configuration process

- Not compatible with the existing Docker command-line interface (CLI) and Compose tools

- Complicated manual cluster deployment and automatic horizontal scaling setup

Drawbacks of using Docker:

- It does not provide a storage option

- It has poor monitoring options

- No automatic rescheduling of inactive nodes

- Complicated setup for automatic horizontal scaling

- All actions have to be performed in the command-line interface (CLI)

- Only basic infrastructure handling

- Manual handling of multiple instances

- Needs support from other tools for production aspects such as monitoring, healing, and scaling

- Complicated manual cluster deployment

- No support for health checks

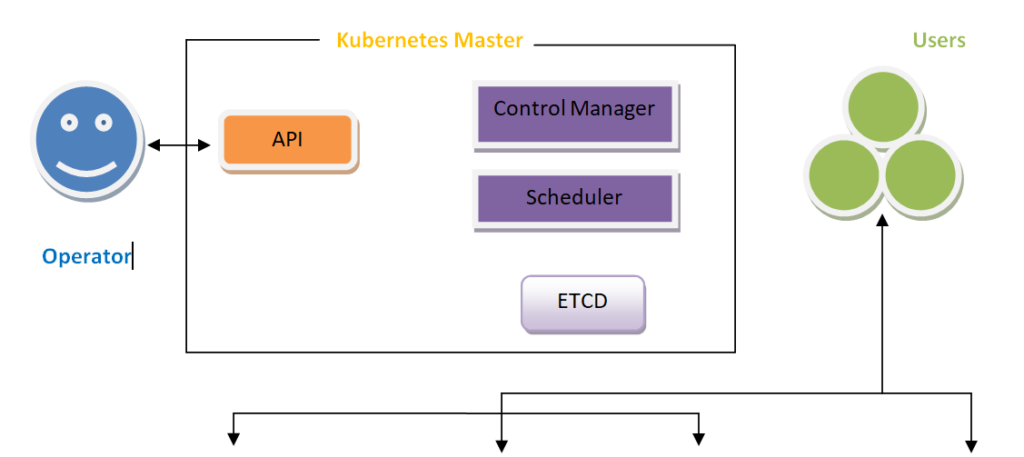

The two main components of a Kubernetes cluster are:

- Node – the general term for the VMs and bare-metal servers that Kubernetes manages.

- Pod – the basic unit of deployment in Kubernetes.

A pod is a group of related Docker containers that need to run together. For instance, a web server may need to be deployed alongside a Redis caching server, so you can encapsulate the two in a single pod. Kubernetes deploys them side by side. If it makes things easier for you, you can picture a pod as consisting of a single container.
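To make the web server and Redis example concrete, here is a minimal sketch that builds a pod manifest with two containers. The pod name `web-with-cache`, the images `nginx` and `redis`, and the helper `build_pod_manifest` are assumptions chosen for illustration; the field names follow the Kubernetes Pod API.

```python
import json


def build_pod_manifest(name, containers):
    """Return a Pod manifest as a plain dict.

    `containers` is a list of (container_name, image, port) tuples.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": c_name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
                for c_name, image, port in containers
            ]
        },
    }


# Hypothetical pod: a web server and its Redis cache deployed together.
manifest = build_pod_manifest(
    "web-with-cache",
    [("web", "nginx", 80), ("cache", "redis", 6379)],
)

# Print the manifest as JSON, which kubectl accepts alongside YAML.
print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and passing it to `kubectl apply -f` would ask the cluster to run both containers in one pod, scheduled onto the same node.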

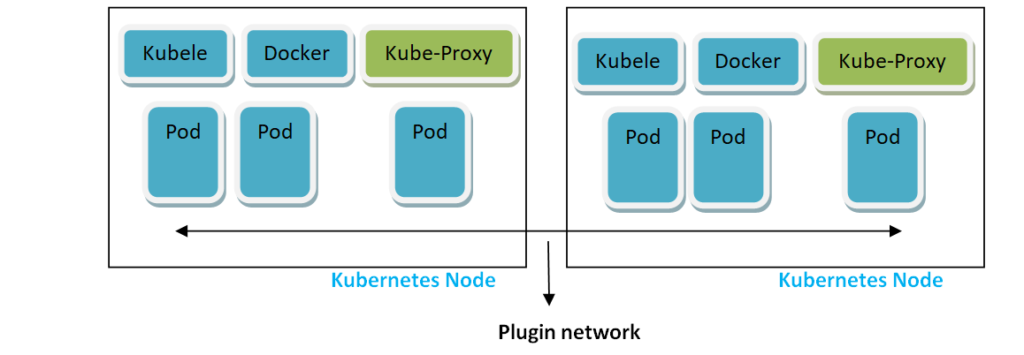

Nodes, in turn, come in two types.

- Master node – where the core of Kubernetes is installed. It controls the scheduling of pods across the worker nodes, and its job is to make sure the desired state of the cluster is maintained.

- Worker nodes – where the applications actually run.

Kubernetes Master includes:

kube-controller-manager: It is responsible for tracking the current state of the cluster (for example, the number of running pods) and making decisions to reach the desired state (for example, the number of pods that should be running). It watches kube-apiserver for information about the cluster.

kube-apiserver: This API server exposes the gears and levers of Kubernetes. It is used by web UI dashboards and by command-line utilities such as kubectl. These utilities are in turn used by human operators to interact with the Kubernetes cluster.

kube-scheduler: It decides how events and jobs are scheduled across the cluster based on resource availability, the policies set by operators, and so on. It also watches kube-apiserver for information about the cluster.

etcd: It is the “storage stack” of the Kubernetes master nodes. It stores data as key-value pairs and holds policies, definitions, secrets, the state of the system, etc.
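The division of labour between the controller manager and the scheduler can be sketched as a toy model. This is not Kubernetes code: the `Node` class, the function names, and the "most free CPU" placement rule are all assumptions made for illustration, and the real scheduler weighs many more factors.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu: float  # CPU cores still unallocated on this node
    pods: list = field(default_factory=list)


def reconcile(desired_replicas, running_pods):
    """Controller step: pods to add (positive) or remove (negative)."""
    return desired_replicas - len(running_pods)


def schedule(pod_name, cpu_request, nodes):
    """Scheduler step: place the pod on the fitting node with the most free CPU."""
    candidates = [n for n in nodes if n.free_cpu >= cpu_request]
    if not candidates:
        return None  # no node has room, so the pod stays unplaced
    target = max(candidates, key=lambda n: n.free_cpu)
    target.free_cpu -= cpu_request
    target.pods.append(pod_name)
    return target.name


def control_loop_once(desired_replicas, cpu_request, nodes):
    """One pass: compare desired and current state, then place missing pods."""
    running = [p for n in nodes for p in n.pods]
    missing = reconcile(desired_replicas, running)
    placements = {}
    for i in range(max(missing, 0)):
        pod_name = f"web-{len(running) + i}"
        placements[pod_name] = schedule(pod_name, cpu_request, nodes)
    return placements


# Hypothetical cluster: two workers, three replicas wanted, 1 CPU each.
nodes = [Node("worker-1", free_cpu=2.0), Node("worker-2", free_cpu=1.0)]
placements = control_loop_once(desired_replicas=3, cpu_request=1.0, nodes=nodes)
print(placements)
```

Running `control_loop_once` a second time with the same arguments places nothing, because the current state already matches the desired state. That idempotence is the point of a reconciliation loop.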

The worker node includes:

kubelet: It relays information about the health of the node to the master and executes the instructions the master node gives it.

kube-proxy: This network proxy lets the various microservices of your application communicate with each other inside the cluster, and it can expose the application to the outside world if you want that. In principle, each pod can talk to every other pod through this proxy.

Docker: This is the final piece of the puzzle. Each node runs a Docker engine to manage the containers.

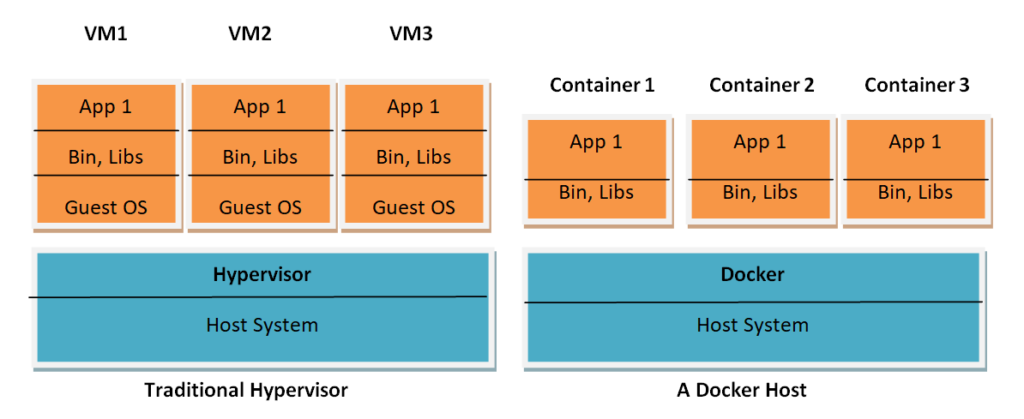

Architecture of Docker

Previously, cloud service providers used virtual machines to isolate running applications from one another. A host operating system provides virtual CPU, memory, and other resources to multiple guest operating systems. Each guest OS works as if it were running on actual physical hardware and is entirely unaware of the other guests running on the same physical server.

However, virtualization has some specific problems:

- Provisioning resources takes time. Each virtual disk image is large, and it takes a while to get a virtual machine ready for use.

- System resources are used inefficiently. An OS kernel expects to manage everything it is given. So when a guest OS believes 2 GB of memory is available to it, it takes control of that memory even if the applications running on that OS use only part of it.
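The memory inefficiency in the second point is easy to see with a little arithmetic. The 2 GB reservation comes from the text above; the number of guests and the memory the applications actually use are hypothetical figures chosen only for illustration.

```python
# Hypothetical numbers for illustration only.
GUESTS = 10                  # guest VMs on one host (assumed)
RESERVED_PER_GUEST_GB = 2.0  # memory each guest OS claims up front
USED_PER_GUEST_GB = 0.5      # memory its applications actually use (assumed)

reserved_total = GUESTS * RESERVED_PER_GUEST_GB
used_total = GUESTS * USED_PER_GUEST_GB
idle_total = reserved_total - used_total

print(f"reserved: {reserved_total} GB, used: {used_total} GB, idle: {idle_total} GB")
```

Under these assumptions, three quarters of the reserved memory sits idle, yet it cannot be handed to another guest.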

In contrast, when we run containerized applications, it is the operating system that is virtualized, not the hardware. Rather than giving a virtual machine virtual hardware, you give the application a virtual OS. You can then run multiple applications and, if you need to, impose limits on their resource usage. Each application runs unaware of the other containers running alongside it.
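As a rough sketch of how such resource limits can be imposed, the snippet below assembles a `docker run` command with a memory cap and a CPU cap. The helper `docker_run_command` and the `nginx` image are assumptions for illustration; `--memory`, `--cpus`, and `--rm` are existing `docker run` options. The command is only built and printed, not executed.

```python
import shlex


def docker_run_command(image, memory=None, cpus=None):
    """Build a `docker run` argument list with optional resource limits."""
    cmd = ["docker", "run", "--rm"]
    if memory is not None:
        cmd += ["--memory", memory]   # hard memory limit, e.g. "512m"
    if cpus is not None:
        cmd += ["--cpus", str(cpus)]  # how many CPUs the container may use
    cmd.append(image)
    return cmd


# Hypothetical container: nginx capped at 512 MB of memory and 1.5 CPUs.
cmd = docker_run_command("nginx", memory="512m", cpus=1.5)
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd)` on a machine with Docker installed would start the container under those limits.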